Distributed models for flight approval are much harder (than you think)

U-space is Europe’s name for its UAV traffic management (UTM) system. It extends the established duties of Air Traffic Management (ATM) to unmanned or unpiloted aircraft, ranging from small drones to large passenger vehicles for Urban Air Mobility (UAM) applications. In today’s ATM, humans make most decisions. UTM, however, is designed to be digital and automated from the ground up. While ATM instances manage thousands of flights daily, UTM aims at orders of magnitude more.

U-space is still a work in progress. In Europe, the high-level regulation (EU 2021/664) has been in force since May 2021, but it still needs to be supplemented by more detailed regulations. Once U-space is fully operational, it promises to provide services like flight approvals, traffic information (about manned and unmanned aircraft), remote identification of aircraft, airspace management, weather updates, and geo-awareness. It is expected to enable efficient, automated, and safe operation of large and diverse fleets of drones. Access to airspace is supposed to be fair and cheap, and thus not dominated by large companies. Finally, it shall at least match commercial civil aviation’s excellent level of safety. In short, U-space is intended to be the foundation that keeps everything from small delivery drones to large electric passenger drones running smoothly, much like ATM does today for manned aviation, but at a much larger scale, lower cost, and higher quality.

An international, collaborative effort to develop the technical standards for U-space is underway, involving the FAA, EASA, and other organizations. Creating such a complex system is challenging. Its long-term design goals are uncertain, as we do not yet know what the drone ecosystem will look like in 20 or 50 years. Already today, it is massively different from what we expected half a decade ago.

Centralized? Distributed? Federated!

A central decision taken very early in the project was to use a federated architecture. Federation is an approach to designing complex systems that mixes distributed and centralized aspects. Federated systems allow a high level of autonomy of service providers while defining precise rules and protocols for interaction between them. End-users can select a service provider from a large pool of offerings based on quality, features, cost, etc.

This works as follows: Users interact with the system through a number of service providers. Service providers operate independently, storing the data that is relevant for their domain of operation. Data is shared and synchronized between service providers only when needed, for example when a user initiates an interaction that affects the domain of another service provider.

To initiate such a synchronization, a service provider must first identify the peer with which it needs to synchronize. To do so, it queries a central federation server, which maintains a directory of service providers. The server returns the contact details of the service provider that matches the query. The direct synchronization can then start, using a standardized protocol for data exchange.
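The lookup-then-synchronize pattern described above can be sketched in a few lines of Python. This is a minimal, in-memory illustration, not a real protocol: the class names `ServiceProvider` and `FederationDirectory`, the `endpoint` field, and the idea of keying the directory by a "domain" string are all assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class ServiceProvider:
    """An independent provider that stores only the data of its own domains."""
    name: str
    endpoint: str        # hypothetical contact detail handed out by the directory
    domains: set         # domains (for example regions) this provider serves
    records: dict = field(default_factory=dict)

    def receive(self, key, value):
        # Stand-in for the standardized data-exchange protocol between peers.
        self.records[key] = value


class FederationDirectory:
    """Central directory: knows who serves which domain, stores no user data."""

    def __init__(self):
        self._providers = []

    def register(self, provider):
        self._providers.append(provider)

    def lookup(self, domain):
        # Return every provider that is responsible for the given domain.
        return [p for p in self._providers if domain in p.domains]


def synchronize(origin, directory, domain, key, value):
    """Store the record locally, then push it directly to the affected peers."""
    origin.records[key] = value
    contacted = []
    for peer in directory.lookup(domain):
        if peer is not origin:
            peer.receive(key, value)   # direct provider-to-provider exchange
            contacted.append(peer.name)
    return contacted


directory = FederationDirectory()
a = ServiceProvider("A", "https://a.example", {"north"})
b = ServiceProvider("B", "https://b.example", {"north", "south"})
c = ServiceProvider("C", "https://c.example", {"south"})
for provider in (a, b, c):
    directory.register(provider)

contacted = synchronize(a, directory, "north", "item-1", "payload")
print(contacted)   # ['B']
```

Note that the directory is only consulted for discovery. The record itself travels directly from provider A to provider B, and provider C, which does not serve the affected domain, never sees it.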

Federated systems can have a number of benefits, including resilience, robustness, scaling effects, and lower cost. In general, it is a good choice if there is a clear benefit in having a large number of service providers.

A good example of a federated system is email, invented in the early 1970s: The data (that is, emails, including headers and attachments) is highly distributed among millions of email servers like Gmail, Yahoo, or smaller corporate or private servers. Emails are synced between servers only when needed, that is, when a user sends an email to an address that is not on the same server, e.g., from alice@gmail.com to bob@yahoo.com. To find the right server, the sender performs a lookup in the Domain Name System (DNS). DNS is a hierarchical system for globally organizing internet names such as ibm.com. Domains can contain an entry for an email server; hence the sender can query DNS for the correct server to contact. Once the recipient's server is known, the sender contacts it directly and sends the email using the Simple Mail Transfer Protocol (SMTP), which is defined precisely in an open standard.

Email was the original killer app for the internet, long before we started browsing the World Wide Web or posting cat pictures on Facebook. Email has worked exceptionally well for decades, scaling from a handful to millions of servers, thanks to a few clever, simple, well-defined protocols and the federated structure that made this scaling possible. But it has also failed to innovate: Large attachments, end-to-end encryption, message integrity and authentication, read receipts, guaranteed delivery, etc., are still not available to the average user, even though there was a clear need for them and major attempts were made to add them. Today, we’re using centralized, proprietary services like Signal, WhatsApp, or Telegram for some of these features.

Federated U-space

Why does U-space use a federated design? The traditional national ATM providers (air navigation service providers, ANSPs) with their state monopolies were perceived as dead ends: They had not innovated or invested enough in the past, nor did they appear capable of doing so in the future. Decision-making was (and still is) very much human-in-the-loop (on the ground and in the cockpit) and thus hard to automate and scale. Clearly, this was not the model for U-space.

The idea was thus to start with the opposite of a monopoly: A competitive environment. Competition would lead to innovation, a high level of safety, and low prices for users, and it would scale very quickly. If we succeeded in creating a vibrant ecosystem, U-space could even become the sandbox or role model for future ATM, or replace it altogether.

In this envisioned ecosystem, many U-space Service Providers (USPs, also abbreviated USSPs) are to collaborate, sometimes in the same area, offering different features, services, and specializations tailored to their customer base. The USPs would communicate over the internet. To synchronize, they would use the Discovery and Synchronization Service (DSS), which provides the means to find all other USPs operating in the same area, comparable to DNS in the above email example. DSS does not store UAV flight data itself. Crucially, each USP autonomously decides which data to share with or request from other USPs.

Not all parts of a UTM need to be federated. For instance, weather information, geo-awareness, or fleet management functions can be offered independently by each USP. Synchronization is mainly needed for flight approvals and collision avoidance. These are fundamentally similar problems on different time scales: While flight approvals look minutes to hours ahead, collision avoidance has a horizon of seconds to minutes. The concept is quite simple: No two vehicles may occupy the same airspace at the same time. Safety buffers are applied in both space and time to deal with uncertainties. If a conflict is predicted, then the plan of at least one of the vehicles must be changed. Flight approvals compare flight plans; collision avoidance compares actual trajectories.
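The rule "no two vehicles in the same airspace at the same time, with safety buffers" can be illustrated with a small conflict check. The sketch below assumes a deliberately simple model that is not taken from any U-space standard: each flight plan is a single axis-aligned box in metres plus a time window in seconds, and the buffer values (50 m, 60 s) are arbitrary placeholders. The names `Volume4D` and `in_conflict` are made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Volume4D:
    """Axis-aligned box in space (metres) plus a time window (seconds)."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    z_min: float
    z_max: float
    t_start: float
    t_end: float

    def buffered(self, space_m, time_s):
        # Grow the volume by the safety buffer in every spatial direction
        # and at both ends of the time window.
        return Volume4D(
            self.x_min - space_m, self.x_max + space_m,
            self.y_min - space_m, self.y_max + space_m,
            self.z_min - space_m, self.z_max + space_m,
            self.t_start - time_s, self.t_end + time_s,
        )


def _overlaps(a_min, a_max, b_min, b_max):
    # Two intervals overlap if each one starts before the other ends.
    return a_min < b_max and b_min < a_max


def in_conflict(a, b, space_m=50.0, time_s=60.0):
    """True if b comes closer to a than the required separation allows."""
    a = a.buffered(space_m, time_s)
    return (
        _overlaps(a.x_min, a.x_max, b.x_min, b.x_max)
        and _overlaps(a.y_min, a.y_max, b.y_min, b.y_max)
        and _overlaps(a.z_min, a.z_max, b.z_min, b.z_max)
        and _overlaps(a.t_start, a.t_end, b.t_start, b.t_end)
    )


plan_a = Volume4D(0, 100, 0, 100, 0, 120, 0, 600)
plan_b = Volume4D(120, 200, 0, 100, 0, 120, 300, 900)  # 20 m east, overlapping in time
plan_c = Volume4D(0, 100, 0, 100, 0, 120, 700, 1000)   # same place, 100 s later

print(in_conflict(plan_a, plan_b))               # True: closer than the 50 m buffer
print(in_conflict(plan_a, plan_b, space_m=0.0))  # False: the raw volumes do not touch
print(in_conflict(plan_a, plan_c))               # False: 100 s gap exceeds the 60 s buffer
```

The same check would serve both flight approval and collision avoidance in this toy model. Only the inputs differ: planned volumes with a long time horizon in one case, volumes predicted from actual trajectories with a short horizon in the other.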

This is a crucial aspect for the safety of U-space: The certainty with which two vehicles can be prevented from occupying the same airspace at any given time.

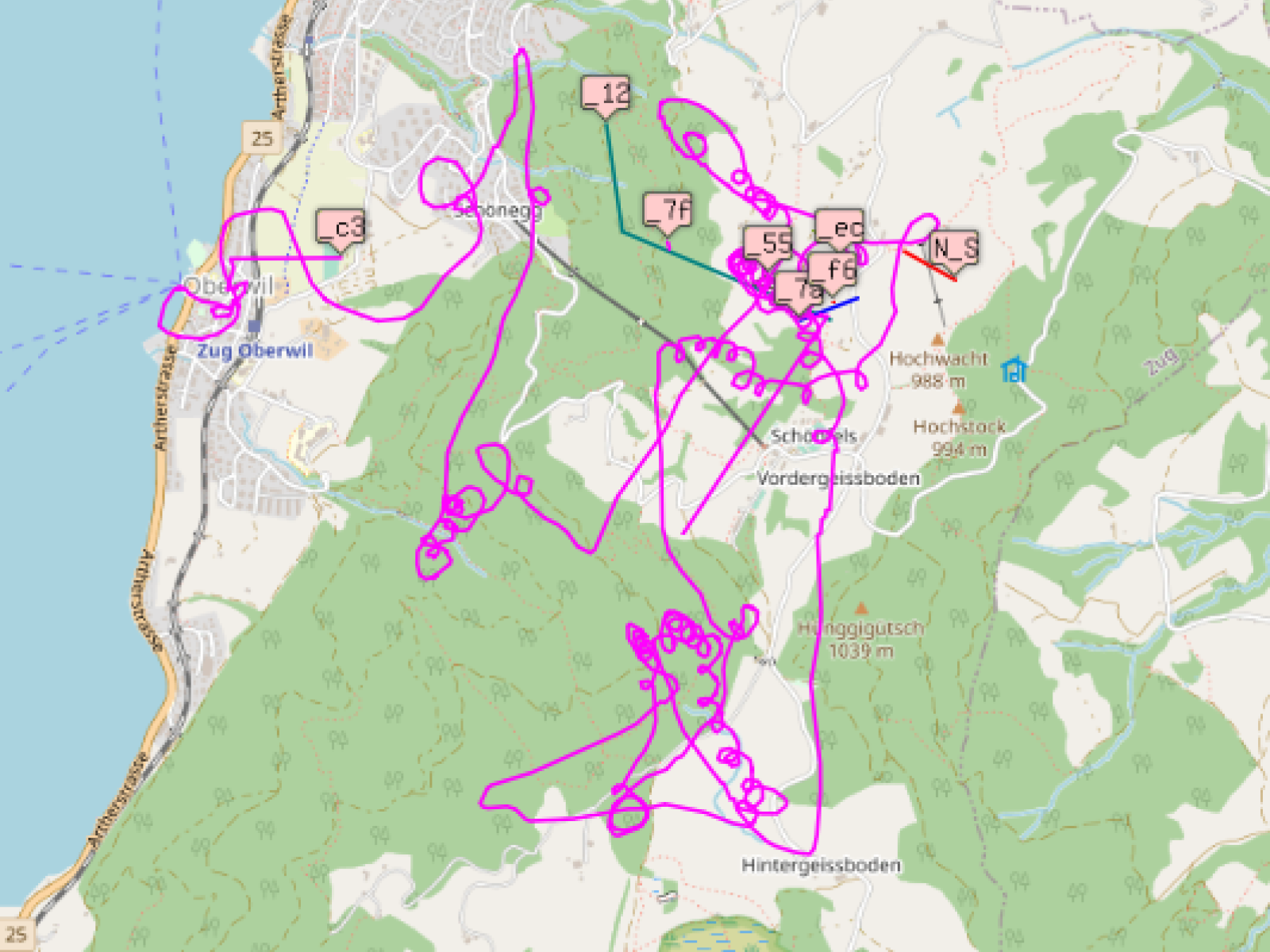

Federated U-space attempts to solve this collaboratively: A USP checks for conflicting flight plans by contacting nearby USPs. If a conflict is detected, the flight plan is modified until it is free of conflict. For low traffic densities, this can be sufficient, but the more USPs and the more vehicles, the harder it gets to maintain a consistent view of the airspace.

Distributed systems are hard

Distributed systems have some surprising pitfalls that are not immediately apparent. Notably, the intuition we have from centralized systems is often misleading. In the following, we point out some of the problems that arise in a distributed U-space, covering technology, safety, and business:

- Starting with technology, a sudden increase in network latency or a complete network failure may cut a USP off from the internet. When this happens, flight information and approvals can no longer be exchanged between this USP and the others. How do the other USPs deal with this? Can they simply ignore it, or do they need to wait until the connection comes back up? If the former: What impact does it have on safety, and how can it be mitigated? If the latter: What is the effect on the availability of U-space, given that every USP then becomes a single point of failure?

- The next challenge is maintaining transactional integrity: This is the property of a database that allows it to execute a change without interference from other changes executing at the same time, which would otherwise corrupt the data. For U-space, this would mean that a user can file a flight plan without another, conflicting flight plan being filed somewhere else at the same time. This is a fundamental aspect of U-space: If integrity is lost, so is safety, as conflicting flight plans may get approved. Integrity is almost trivial for centralized databases but vastly more difficult for distributed systems.

- Suppose the above problems are infrequent enough that we can try to work around them (this may indeed be feasible initially, when traffic volume is low). Then, since inconsistencies are inherently accepted, there needs to be a mechanism to reliably recover a consistent state. This is the problem of finding consensus: Let’s assume there are two conflicting flight plans, each approved by a different USP. One USP clearly needs to delete or change its flight plan and inform the operator, but which one? Consensus protocols are widely discussed today in the context of cryptocurrencies. Unsurprisingly, they are hard to design, implement, and operate. Some are also really expensive and have other negative consequences, such as high transactional latency.

- The more USPs there are, the more we will also have a problem of trust: Is everybody playing by the rules? Who is to judge this? Non-compliant behavior may be intentional: There are, after all, commercial benefits to bending the rules. More often, though, it will be bugs and human errors that lead to non-compliance. This will happen, so we should at least have mechanisms to detect it and deal with it after the fact.

- Solving these technical problems is complex, which makes it harder, more expensive, or even commercially infeasible for companies to become a USP in the first place. Do we risk the opposite of what was intended, namely a U-space dominated by a very small number of financially very potent players?

- The commercial aspects of U-space are still quite murky overall: Airspace is assumed to be free to reserve, and users only pay the USP a small service fee. But should airspace in high demand not be more expensive to use? Getting airspace pricing right is extremely important since it helps to create the right incentives and behaviors. While we cannot yet calculate the cost of operating U-space nor know the commercial potential, there should at least be a coarse pricing model: What should be charged, how is the price determined, who pays whom, and how transparent is this? How can such price finding work in the distributed setup? Can airspace be resold or auctioned off, as is common practice in ATM?

- Finally, the hard problem of fairness: Access to U-space is supposed to be granted under fair conditions. Small users shall not be bullied by large corporations. But what does this mean? There is no objective standard for fairness, no arbitration authority, no clearly designed system of incentives. Who will reprimand or sue users in case of abuse? Apart from the (lack of a) price for airspace, are there any other incentives that deter users from behaving unfairly? How would users even detect their own unfair behavior? Fairness is far trickier in a decentralized environment.
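The transactional-integrity problem from the list above can be made concrete with a small, deterministic simulation. It is a sketch under strong simplifying assumptions: airspace is a named cell, a flight plan is a tuple `(cell, t_start, t_end)`, and each USP approves a plan as soon as neither it nor its peers hold a colliding one. The class `Usp` and the function `file_plans` are invented for this illustration and do not reflect any actual U-space protocol.

```python
def collides(p, q):
    """Two plans collide if they use the same cell in overlapping time windows."""
    return p[0] == q[0] and p[1] < q[2] and q[1] < p[2]


class Usp:
    """A provider that stores its own approved plans and can ask its peers."""

    def __init__(self, name):
        self.name = name
        self.approved = []   # plans as (cell, t_start, t_end)
        self.peers = []

    def is_free(self, plan):
        # Check step: look at our own plans and at every peer's plans.
        everyone = [self, *self.peers]
        return not any(
            collides(plan, other) for usp in everyone for other in usp.approved
        )

    def approve(self, plan):
        # Act step: record the plan locally.
        self.approved.append(plan)


def file_plans(interleaved):
    """Two operators file colliding plans with two different USPs."""
    a, b = Usp("A"), Usp("B")
    a.peers, b.peers = [b], [a]
    plan_a = ("cell-17", 0, 600)
    plan_b = ("cell-17", 300, 900)

    if interleaved:
        # Both USPs run their check before either has recorded an approval.
        a_free = a.is_free(plan_a)
        b_free = b.is_free(plan_b)
        if a_free:
            a.approve(plan_a)
        if b_free:
            b.approve(plan_b)
    else:
        # One request is completely finished before the other starts.
        if a.is_free(plan_a):
            a.approve(plan_a)
        if b.is_free(plan_b):
            b.approve(plan_b)
    return len(a.approved) + len(b.approved)


print(file_plans(interleaved=False))  # 1: the second plan is rejected
print(file_plans(interleaved=True))   # 2: both colliding plans are approved
```

Nothing in this simulation is buggy in the usual sense: each USP follows the rule "check, then approve" correctly. The conflict slips through only because check and approval are not one atomic step across providers, which is exactly what a single database gets almost for free.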

Email, by the way, has developed interesting solutions to many of these problems: Network degradation is almost by definition not an issue since there is no urgency: If the network is down, the server simply tries again later, which makes the system robust. Transactional integrity and consensus finding are not needed across the system as a whole, only between two mail servers, where they are easy to achieve. The problem of trust is the hardest. It is currently mitigated by a complicated system of individual blacklists and whitelists, sophisticated systems for detecting malicious behavior, and historical data. As an example: If a mail server continuously sends large amounts of spam to Gmail, then it will eventually be blacklisted. New mail servers, on the other hand, first need to gain Gmail’s trust by behaving properly. This can take years.

Conclusion

Designing the next-generation traffic management system from scratch is a genuinely hard task. The decision to use a federated, decentralized architecture was made with good intentions: To enable competition and allow the USPs to operate independently and with responsibility. But it introduces a level of complexity that may lead to the opposite result: The technology becomes so complicated that only a handful of (very potent) providers are able to participate, with potential conflicts of interest (cross-subsidization, governmental influence).

Also, the complexity may not be needed: A vibrant ecosystem is easily conceivable if only the aspect of safe flight approval is centralized, that is, uniquely reserving a volume of airspace for a defined time span. Everything else can be decentralized or delegated to individual USPs. Quite possibly, we would see a more diverse ecosystem.
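To illustrate why a centralized reservation is comparatively easy to get right, here is a minimal sketch of such a registry. It assumes a single process in which a lock makes "check and reserve" one atomic step; a real service would more likely rely on database transactions and would need to address availability separately. The class `AirspaceRegistry`, the method `try_reserve`, and the cell naming are hypothetical.

```python
import threading


class AirspaceRegistry:
    """Single authority that grants each piece of airspace and time only once."""

    def __init__(self):
        self._lock = threading.Lock()
        self._reservations = []   # entries are (cell, t_start, t_end, owner)

    def try_reserve(self, cell, t_start, t_end, owner):
        # The lock turns "check for overlap, then record" into one atomic step,
        # so two concurrent requests can never both pass the check.
        with self._lock:
            for c, s, e, _ in self._reservations:
                if c == cell and t_start < e and s < t_end:
                    return False
            self._reservations.append((cell, t_start, t_end, owner))
            return True


registry = AirspaceRegistry()
results = []


def worker(owner):
    # Every USP tries to reserve the same cell for the same time window.
    results.append(registry.try_reserve("cell-17", 0, 600, owner))


threads = [threading.Thread(target=worker, args=(f"usp-{i}",)) for i in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(results.count(True))   # 1: exactly one of the eight requests succeeds

# A window that starts when the first one ends does not overlap it.
adjacent = registry.try_reserve("cell-17", 600, 900, "usp-late")
print(adjacent)              # True
```

Which of the eight requests wins depends on thread scheduling, but the number of winners does not. In this sketch the USPs remain free to differ in everything else they offer; only the final reservation step goes through the shared registry.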

To be clear: None of the challenges described here are unsolvable. But distributed systems are much harder to get right, especially when we have high expectations in terms of safety, reliability, robustness, formal certification, and cybersecurity. Realistically, we should probably expect several improvement cycles with this new technology anyway. The risk we face is that these cycles are too slow or may not happen at all. We may end up with a dysfunctional or, less pessimistically, overly complex and expensive solution, in which only very few large players can afford to compete, or in which national systems dominate, similar to ATC.

Crucially, it may be hard to recover from such a mess. Consider email again: The current deficiencies do not stem from a lack of proposals on how to solve them but from a reluctance of service providers to invest in and adopt new standards: There is simply not enough benefit initially. Introducing fundamental changes may similarly fail for U-space when there is not enough incentive for the individual provider to do so. A change only works if the majority adopts it, which is increasingly hard to achieve in a federated setup.

There is still so much we don’t know about the future drone ecosystem. Manned aviation needed decades of continuous improvement to develop the highly efficient and extremely safe mechanisms that we enjoy today. A similar approach for the development of U-space would mean a simpler, less ambitious, less distributed design with fewer functions, aimed at being practical and commercially feasible today.